FedNaWi: Selecting the Befitting Clients for Robust Federated Learning in IoT Applications

Image credit:

Unsplash

Abstract

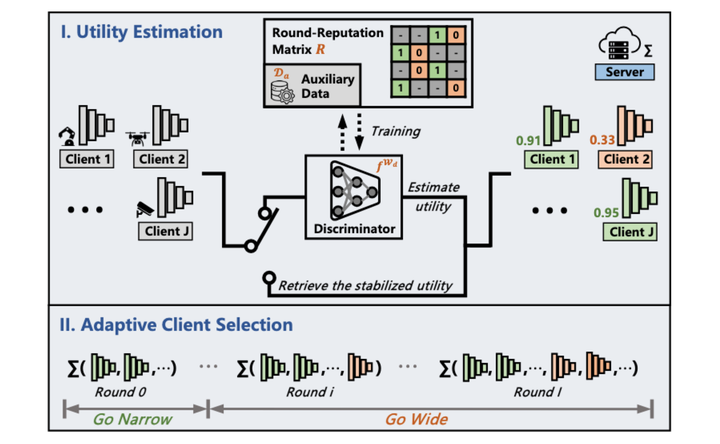

Federated Learning (FL) is an important privacy-preserving learning paradigm that is expected to play an essential role in the future Intelligent Internet of Things (IoT). However, model training in FL is vulnerable to noise and to the statistical heterogeneity of local data across IoT clients. In this paper, we propose FedNaWi, a “Go Narrow, Then Wide” client selection method that speeds up FL training and achieves higher model performance while requiring no additional data or sensitive information to be transferred from clients. Our method first selects reliable clients (i.e., going narrow), which allows the global model to improve quickly, and then includes less reliable clients (i.e., going wide) to exploit more of the clients' IoT data and further improve the global model. To profile client utility, we introduce a unified Bayesian framework that models the utility of each client at the FL server, assisted by a small amount of auxiliary data. We conduct extensive evaluations against 5 state-of-the-art FL methods, on 3 IoT tasks, and under 7 different types of label and feature noise. For the evaluation, we build an FL testbed with 38 IoT nodes (20 running on Raspberry Pi 4B and 18 running on Jetson Nano). Our results show that FedNaWi substantially improves FL accuracy and significantly reduces energy consumption. In particular, FedNaWi improves the accuracy from 35% to 75% in the non-IID Dirichlet setting and reduces the average energy consumption by 55%.
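
To make the "Go Narrow, Then Wide" idea concrete, the following is a minimal sketch of a server-side selection loop. It is not the FedNaWi algorithm itself: the abstract does not specify the Bayesian model or the widening schedule, so the sketch assumes a simple Beta-Bernoulli belief per client (updated by whether a client's update helped on the server's auxiliary data) and a linear schedule that grows the number of selected clients. The names `ClientBelief`, `num_selected`, `select_clients`, and the parameter `narrow_frac` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClientBelief:
    """Assumed Beta(alpha, beta) belief that a client's update helps the global model."""
    alpha: float = 1.0  # pseudo-count of rounds where the update helped
    beta: float = 1.0   # pseudo-count of rounds where the update hurt

    def mean(self) -> float:
        # Posterior mean of the Beta distribution, used as the utility score.
        return self.alpha / (self.alpha + self.beta)

    def update(self, helped: bool) -> None:
        # "helped" would come from evaluating the client's update on the
        # server's small auxiliary dataset (an assumption of this sketch).
        if helped:
            self.alpha += 1.0
        else:
            self.beta += 1.0


def num_selected(round_idx: int, total_rounds: int, n_clients: int,
                 narrow_frac: float = 0.2) -> int:
    """Assumed linear schedule: start narrow, end with all clients."""
    start = max(1, int(narrow_frac * n_clients))
    progress = round_idx / max(1, total_rounds - 1)
    return min(n_clients, start + round((n_clients - start) * progress))


def select_clients(beliefs: dict[int, ClientBelief], k: int) -> list[int]:
    """Pick the k clients with the highest posterior mean utility."""
    ranked = sorted(beliefs, key=lambda cid: beliefs[cid].mean(), reverse=True)
    return ranked[:k]
```

In a training loop, the server would call `num_selected` once per round to get `k`, pass it to `select_clients`, aggregate the returned clients' updates, and then call `update` on each selected client's belief. Early rounds therefore rely on the clients currently believed to be most reliable, while later rounds admit the rest.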